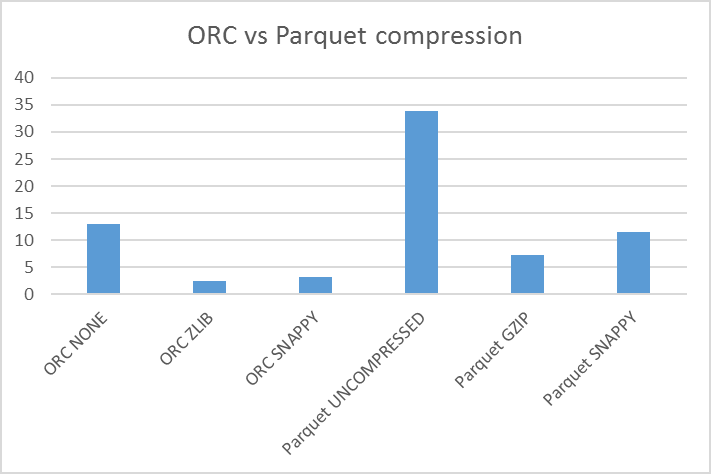

## Creating Big SQL Table using Parquet Format

When tables are created in Big SQL, the Parquet format can be chosen by using the STORED AS PARQUET clause in the CREATE HADOOP TABLE statement, as in this example:

    jsqsh> CREATE HADOOP TABLE inventory (
             trans_id int,
             product varchar(50),
             trans_dt date
           )
           PARTITIONED BY (year int)
           STORED AS PARQUET;

By default, Big SQL uses SNAPPY compression when writing into Parquet tables. This means that if data is loaded into Big SQL using either the LOAD HADOOP or INSERT…SELECT commands, SNAPPY compression is enabled by default.

If Parquet tables are created using Hive, the default is not to have any compression enabled. The following example shows the syntax for a Parquet table created in Hive:

    hive> CREATE TABLE inv_hive (
            trans_id int,
            product varchar(50),
            trans_dt date
          )
          PARTITIONED BY (year int)
          STORED AS PARQUET;

Note that the STORED AS PARQUET syntax is the same in both cases, yet the behavior is different: by default, Hive does not use any compression when writing into Parquet tables.

## Comparing Big SQL and Hive Parquet Table Sizes

The size comparison covers one table using the Parquet file format, populated using Big SQL LOAD HADOOP and Big SQL INSERT…SELECT versus Hive INSERT…SELECT. Since the Parquet files created with Big SQL are compressed, the overall table size is much smaller. The table created and populated in Big SQL is almost half the size of the table created in Big SQL and then populated from Hive.

Starting with Hive 0.13, the 'PARQUET.COMPRESS'='SNAPPY' table property can be set to enable SNAPPY compression.
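As a minimal sketch of how the table property mentioned above might be applied in Hive, the statement below repeats the `inv_hive` definition with a TBLPROPERTIES clause. The table name `inv_hive_snappy` is hypothetical, and the property name is copied exactly as it is given in the text. Some Hive and Parquet documentation spells the key `parquet.compression`, so it is worth checking the size of the written files on your own version before relying on it.

```sql
-- Hypothetical table name; columns match the inv_hive example.
-- Property name as given in the text above. Treat it as an assumption
-- and verify on your Hive version that the written files are compressed.
CREATE TABLE inv_hive_snappy (
  trans_id int,
  product  varchar(50),
  trans_dt date
)
PARTITIONED BY (year int)
STORED AS PARQUET
TBLPROPERTIES ('PARQUET.COMPRESS'='SNAPPY');
```

Setting the codec as a table property keeps the choice with the table definition, so every Hive session that writes into the table picks it up without extra session settings.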

# Orc snappy compression how to

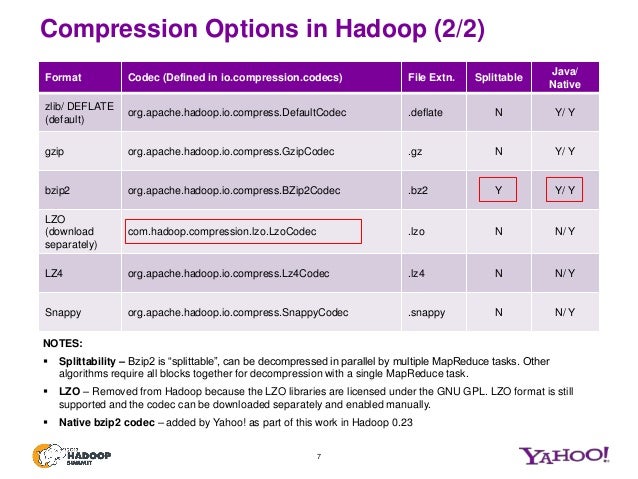

Read this paper for more information on the different file formats supported by Big SQL. The file format to be used is chosen during table creation. Big SQL supports table creation and population from Big SQL as well as from Hive. One of the biggest advantages of Big SQL is that it syncs with the Hive Metastore. This means that Big SQL tables can be created and populated in Big SQL, or created in Big SQL and populated from Hive. Hive tables can also be populated from Hive and then accessed from Big SQL after the catalogs are synced.

When loading data into Parquet tables, Big SQL uses SNAPPY compression by default. For Hive, compression is not enabled by default, so a table could be significantly larger if it is created and/or populated in Hive. This article describes how to enable SNAPPY compression for tables populated in Hive on IBM Open Platform (prior to Big SQL v5) and Hortonworks Data Platform (from Big SQL v5 onward).
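For a table that was created in Big SQL and is then populated from Hive, one option is to set the Parquet compression codec for the Hive session before running the insert. The sketch below rests on several assumptions: that the `inventory` table is visible in the current Hive database, that a staging table named `inventory_staging` exists (hypothetical), and that your Hive version honors the session key `parquet.compression`.

```sql
-- Assumption: this Hive version honors parquet.compression as a session setting.
SET parquet.compression=SNAPPY;

-- inventory_staging is a hypothetical source table holding the same
-- columns as inventory, plus a year column used here as a filter.
INSERT INTO TABLE inventory PARTITION (year = 2016)
SELECT trans_id, product, trans_dt
FROM inventory_staging
WHERE year = 2016;
```

A session-level setting only affects writes made in that session, so it has to be repeated (or placed in a shared configuration) wherever Hive populates the table.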
